Posts tagged community

Recent discussion

Context: These are very rough notes I took for a memo session I was presenting at the UGOR '24 retreat.

Why I think this is important:

- A good social vibe is (in my opinion) a precondition to a successful EA group.

- Having friends in your group is an incentive

This announcement was written by Toby Tremlett, but don’t worry, I won’t answer the questions for Lewis.

Lewis Bollard, Program Director of Farm Animal Welfare at Open Philanthropy, will be holding an AMA on Wednesday 8th of May. Put all your questions for him on this thread...

Are there any interventions that are especially promising for increasing the fraction of philanthropic and governmental spending on animal welfare, which is currently tiny?

Not sure how to post these two thoughts so I might as well combine them.

In an ideal world, SBF should have been sentenced to thousands of years in prison. This is partially due to the enormous harm done to both FTX depositors and EA, but mainly for basic deterrence reasons; a risk-neutral person will not mind 25 years in prison if the ex ante upside was becoming a trillionaire.

However, I also think many lessons from SBF's personal statements e.g. his interview on 80k are still as valid as ever. Just off the top of my head:

- Startup-to-give as a high EV career path. Entrepreneurship is why we have OP and SFF! Perhaps also the importance of keeping as much equity as possible, although in the process one should not lie to investors or employees more than is standard.

- Ambition and working really hard as success multipliers in entrepreneurship.

- A career decision algorithm that includes doing a BOTEC and rejecting options that are 10x worse than others.

- It is probably okay to work in an industry that is slightly bad for the world if you do lots of good by donating. [1]

Just because SBF stole billions of dollars does not mean he has fewer virtuous personality traits than the average person. He hits at least as many multipliers as the average reader of this forum. But importantly, maximization is perilous; some particular qualities like integrity and good decision-making are absolutely essential, and if you lack them your impact could be multiplied by minus 20.

[1] The unregulated nature of crypto may have allowed the FTX fraud, but things like the zero-sum zero-NPV nature of many cryptoassets, or its negative climate impacts, seem unrelated. Many industries are about this bad for the world, like HFT or some kinds of social media. I do not think people who criticized FTX...

EA is very important to me. I’ve been EtG for 5 years and I spend many hours per week consuming EA content. However, I have zero EA friends (I just have some acquaintances).

(I don't live near a major EA hub. I've attended a few meetups but haven't really connected with ...

Perhaps you could start a group that does something slightly different? Or speak with your national organisation (if you have one) and collaborate with them in starting a national cause or profession-based group? Or a company group?

For example, in the Netherlands, in addition to our city/student groups, we have a policy and politics group, a new group at ASML, and a new animal welfare group. And then we also have the Tien Procent Club. They're inspired by Giving What We Can and run events focused on effective giving. They started in Amsterdam but the...

Epoch AI is looking for a Researcher on the Economics of AI to investigate the economic basis of AI deployment and automation. The person in this role will work with the rest of our team to build out and analyse our integrated assessment model for AI automation, research...

Thanks for posting this! In case you didn't notice, you haven't mentioned a deadline. (Wouldn't have thought it weird if you hadn't included the text "Please email careers@epochai.org if you have any questions about this role, accessibility requests, or if you want to request an extension to the deadline.")

My (working) Model of EA Attrition: A University CB Perspective

Background/Why I’m writing this post:

I've been co-organizing an EA student group at Queen's University in Canada for about a year now. When I first joined Queen's Effective Altruism (QEA) on campus as a general...

Solid piece. I like lists of things and I appreciate you taking the time to write one.

I sometimes wonder how to combine many qualitative impressions like this into a more robust picture. Some thoughts:

- Someone could survey groups on attrition rates

- Someone could ask how many people group leaders recalled who were in each group type

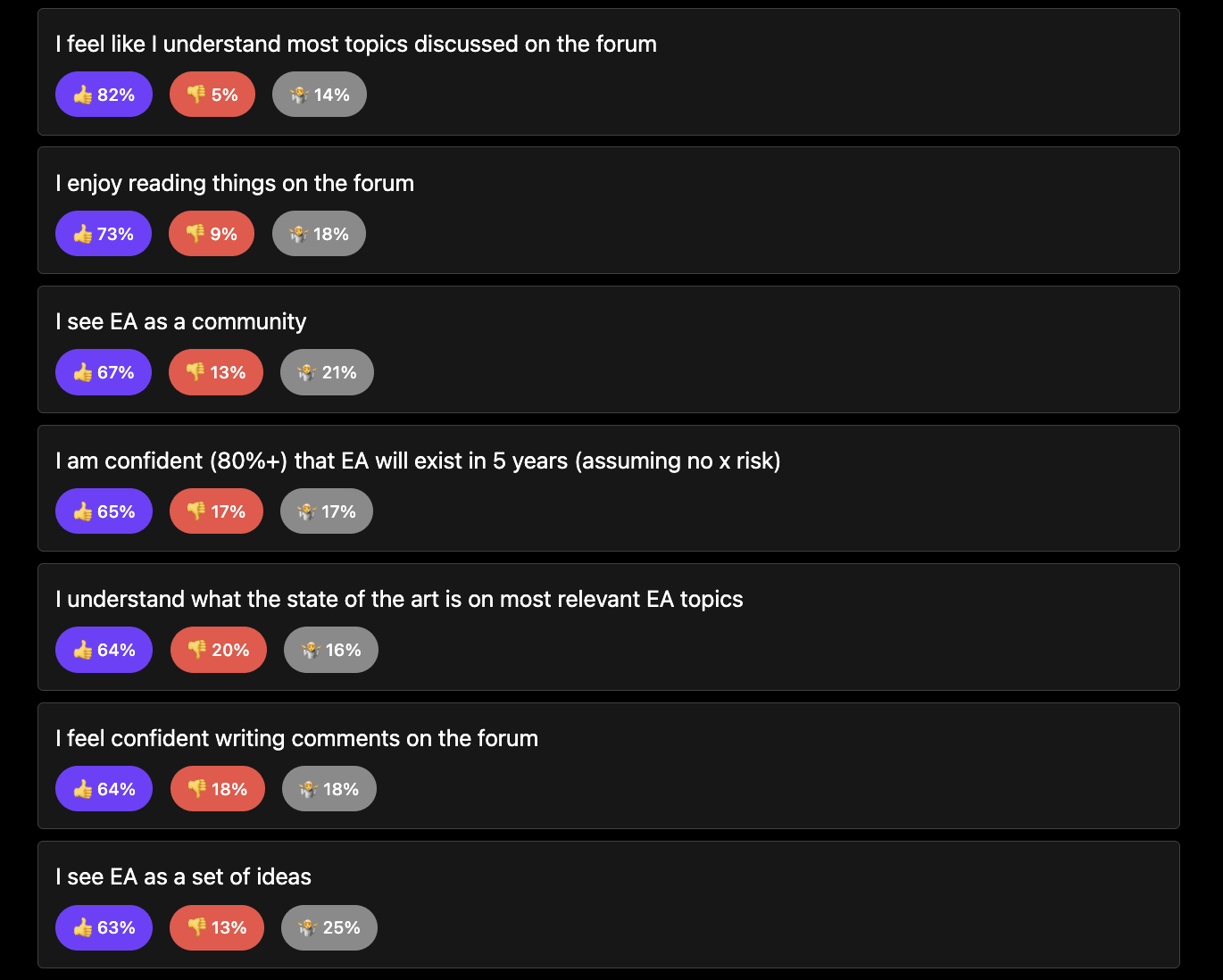

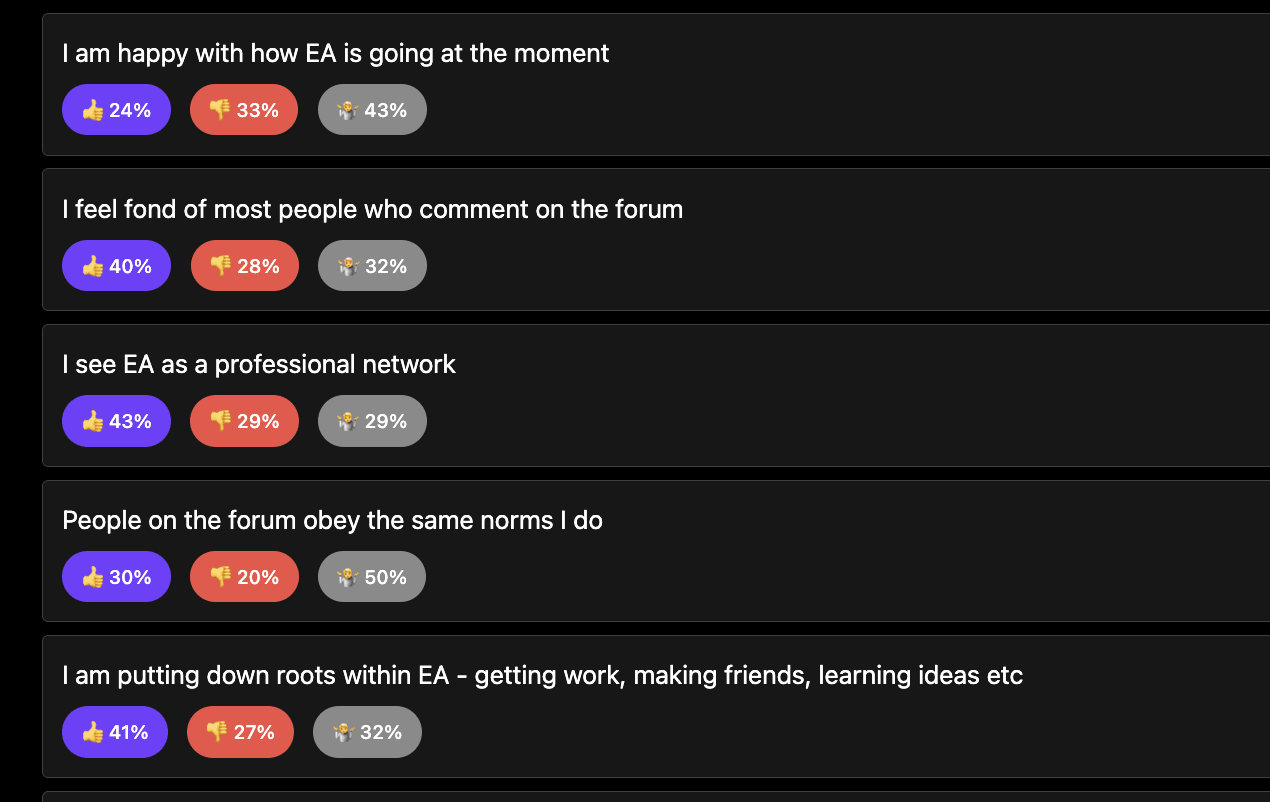

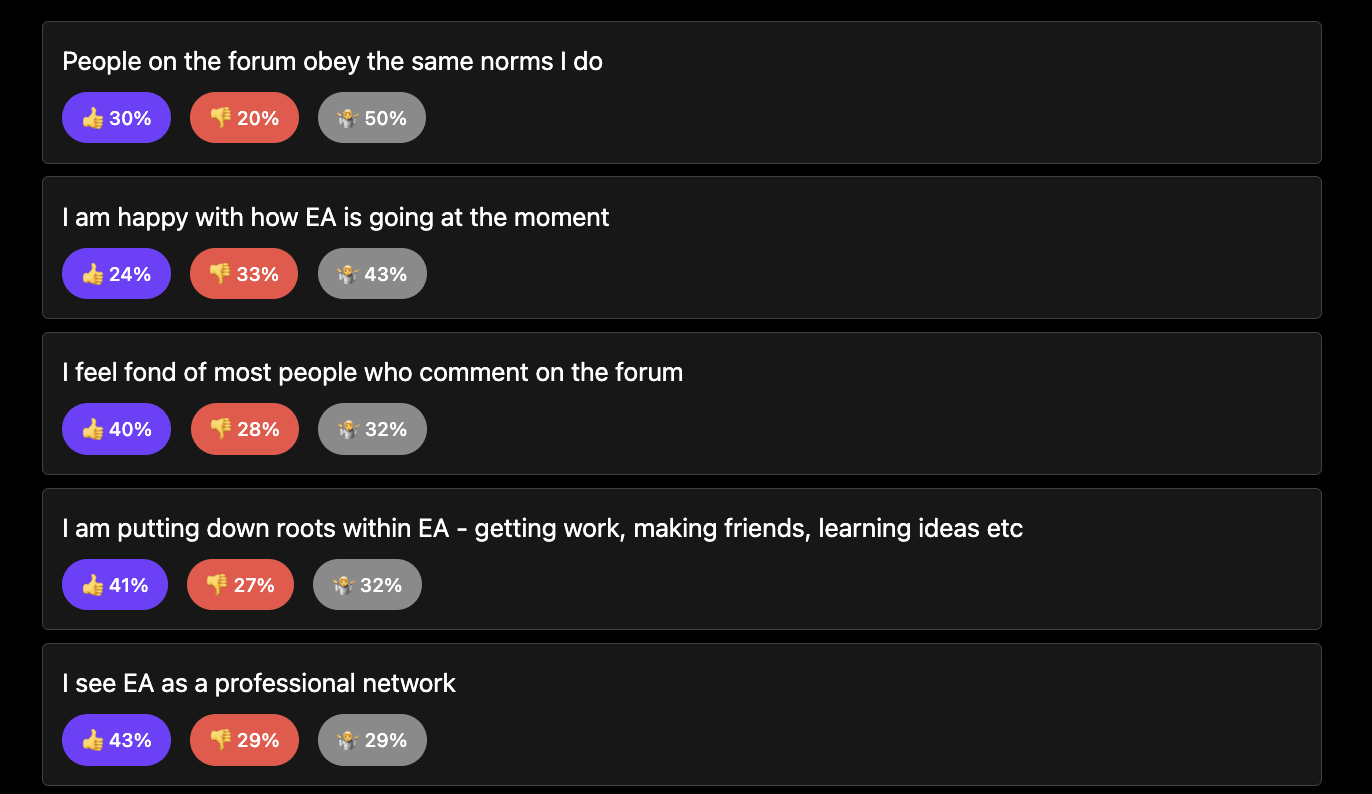

I made a quick (and relatively uncontroversial) poll on how people are feeling about EA. I'll share if we get 10+ respondents.

Currently 27-ish[1] people have responded:

Full results: https://viewpoints.xyz/polls/ea-sense-check/results

Statements people agree with:

Statements where there is significant conflict:

Statements where people aren't sure or dislike the statement:

[1] The applet makes it harder to track numbers than the full site.

I've said that people voting anonymously is good, and I still think so, but when I have people downvoting me for appreciating little jokes that other people post on my shortform, I think we've become grumpy.

GPT-5 training is probably starting around now. It seems very unlikely that GPT-5 will cause the end of the world. But it’s hard to be sure. I would guess that GPT-5 is more likely to kill me than an asteroid, a supervolcano, a plane crash or a brain tumor. We can predict...

I really like the spectrum videos. I often think about how to get that kind of awareness of how we agree and disagree in an online setting. My tool, viewpoints.xyz, is one kind of push at this.

But there is something really fun about just seeing people share their views on specific points then move on.

I sense if we did it a lot we'd be a healthier community.