A Look into the myschool.edu.au Data

After overcoming a few problems I managed to write a scraper for the myschool.edu.au data. Unfortunately they chose to publish the data as HTML, so the scraping process may have introduced some unknown errors into my data. I am publishing the scraped data (see bottom) on the basis of two cases. As I understand IceTV v Nine Network [2009] HCA 14, any data that my scraper produces as output from the HTML input is not subject to the copyright of the original HTML content (this also means that I cannot publish the HTML pages themselves). And as I understand Telstra Corporation Limited v Phone Directories Company Pty Ltd [2010] FCA 44, the raw data that is scraped is not subject to copyright.

I wish I could bzip2 all those HTML pages and give them to you to save you the download. The myschool.edu.au site doesn’t compress its pages even when I tell it I accept gzip over HTTP, so downloading all the HTML pages used almost 2GB of quota. Oh well.
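For anyone curious what "telling the server I accept gzip" looks like, here is a minimal Python sketch. It is not the scraper's code (that is written in Perl); the function names `build_request`, `decode_body` and `fetch` are made up for illustration. The request advertises gzip, and the response's `Content-Encoding` header shows whether the server actually used it.

```python
import gzip
import urllib.request


def build_request(url):
    """Build a GET request that advertises gzip support to the server."""
    return urllib.request.Request(url, headers={"Accept-Encoding": "gzip"})


def decode_body(content_encoding, body):
    """Return the uncompressed body, whichever form the server sent."""
    if (content_encoding or "").lower() == "gzip":
        return gzip.decompress(body)
    # The server ignored Accept-Encoding and sent plain bytes.
    return body


def fetch(url):
    """Fetch a page; return (html_bytes, was_gzipped, bytes_on_the_wire)."""
    with urllib.request.urlopen(build_request(url)) as resp:
        encoding = resp.headers.get("Content-Encoding")
        raw = resp.read()
    was_gzipped = (encoding or "").lower() == "gzip"
    return decode_body(encoding, raw), was_gzipped, len(raw)
```

If `was_gzipped` comes back `False` for every page, the server is sending uncompressed HTML regardless of what the client asks for, which is the behaviour described above.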

Some preliminary statistics from the data.

- There are a total of 9316 schools (or 9279 after I ran a newer scraper at a later date). Of these,

  - 1538 are Secondary (of which 30% are non-government and 70% are government)

  - 1407 are Combined (of which 68% are non-government and 32% are government)

  - 6054 are Primary (of which 23% are non-government and 77% are government)

  - 317 are Special (of which 15% are non-government and 85% are government)

- So overall,

  - 6451 are Government (69%),

  - 2865 are Non-government (31%)

- These 9316 schools contain a total of 3 366 351 students, of which

  - 1 745 224 are male (51%)

  - 1 651 127 are female (49%)

- The most schools in a single postcode is 40, in postcode 2480.

- The average student attendance rate is 92.007%

  - 91.870% for Government, 92.335% for Non-government

  - 89.205% for Secondary, 92.982% for Primary, 90.675% for Combined, 89.170% for Special.

- There are a total of 265 960 teaching staff (full time equivalent of 241 408) and 124 117 non-teaching staff (full time equivalent of 86 511.9).

I could report a lot more stats like these; all you need is a basic knowledge of SQL. But as much as I enjoy working out these stats, I find graphs and graphics much more intuitive, so that is up next. Because the data has so many dimensions you can make all kinds of graphs, so the best approach would be a system that draws graphics dynamically and lets the user decide what is graphed. That takes more work, so it is on the to-do list.
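To give a feel for the kind of SQL behind stats like these, here is a small sketch using Python's built-in `sqlite3`. The `school` table, its column names and the rows are all made up for illustration; the real schema in the repository may well differ.

```python
import sqlite3

# Hypothetical schema and made-up rows, for illustration only.
DEMO_ROWS = [
    ("A Public School", "Primary", "Government", "2000"),
    ("B Public School", "Primary", "Government", "2000"),
    ("C College", "Primary", "Non-government", "3000"),
    ("D High School", "Secondary", "Government", "4000"),
    ("E Grammar", "Secondary", "Non-government", "5000"),
]


def make_demo_db():
    """Create an in-memory database holding the demo rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE school (name TEXT, type TEXT, sector TEXT, postcode TEXT)"
    )
    conn.executemany("INSERT INTO school VALUES (?, ?, ?, ?)", DEMO_ROWS)
    return conn


def sector_share_by_type(conn):
    """Per school type: number of schools and percentage that are government."""
    query = """
        SELECT type,
               COUNT(*) AS schools,
               ROUND(100.0 * SUM(CASE WHEN sector = 'Government' THEN 1 ELSE 0 END)
                     / COUNT(*)) AS pct_government
        FROM school
        GROUP BY type
        ORDER BY type
    """
    return conn.execute(query).fetchall()


def busiest_postcode(conn):
    """The postcode containing the most schools, with its school count."""
    query = """
        SELECT postcode, COUNT(*) AS schools
        FROM school
        GROUP BY postcode
        ORDER BY schools DESC
        LIMIT 1
    """
    return conn.execute(query).fetchone()


if __name__ == "__main__":
    conn = make_demo_db()
    for row in sector_share_by_type(conn):
        print(row)
    print(busiest_postcode(conn))
```

The same two queries should work largely unchanged against other databases, as long as the table and column names are adjusted to match the actual dump.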

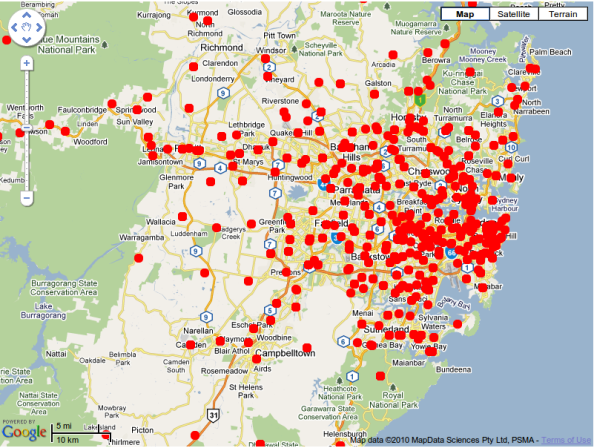

I’ve also looked into doing some heatmaps using the geographical location of the schools. I could have used Google Maps, or OpenStreetMap with libchamplain; both have pros and cons. For now I used Google Maps because their API is simple and I’ve always wanted to experiment with it. The downside is that I’m not sure about the copyright of their maps and of any derivative works. This image is just a test showing a dot for each school in the system, but it’s very easy to change the colour, size and opacity of the dots based on features of the school.
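As a rough idea of how a school's features could drive the look of its dot, here is a small Python sketch. It is independent of any particular mapping API; the function name `marker_style`, the thresholds and the fixed opacity are all arbitrary choices made for illustration.

```python
def marker_style(attendance_rate, enrolments, low=85.0, high=100.0):
    """Map attendance (percent) to a red-to-green colour and enrolments to a radius."""
    # Clamp attendance into [low, high], then rescale it to the range 0..1.
    t = (min(max(attendance_rate, low), high) - low) / (high - low)
    red = round(255 * (1 - t))
    green = round(255 * t)
    colour = f"#{red:02x}{green:02x}00"
    # Radius grows with school size: 2 pixels up to a cap of 10 pixels.
    radius = 2 + min(enrolments, 2000) / 250
    # Opacity is kept constant here; it could just as easily depend on a feature.
    return {"colour": colour, "radius": radius, "opacity": 0.6}
```

The returned dictionary would then be translated into whatever marker options the chosen map library expects.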

Another test (some markers will be missing or in the wrong place, like the ones in NZ!):

Google Earth map showing markers for Australian schools (though not completely accurate). (Copyright notices in image)

Source code? http://github.com/andrewharvey/myschool

Don’t want to scrape and parse but want the raw data in a usable form? http://github.com/andrewharvey/myschool/tree/master/data_exports/

Extra thought: Currently the code uses Google’s API for getting the geolocation of each school. I could use OpenStreetMap for this too, but it would take more investigation to determine what tools exist. At the moment all I know is that I have an .osm file of Australia. Schools there aren’t just one dot, they are polygons, so unless I find some other tools (which probably exist) I would probably just need to use one of the points in the polygon.
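One alternative to picking an arbitrary vertex is to average all of the polygon's vertices. The sketch below does that in Python; the function name `representative_point` is made up, and it assumes the polygon has already been extracted from the .osm file as a list of (lat, lon) pairs. Note that for an oddly shaped (concave) school boundary the average can land outside the polygon, so this is only an approximation.

```python
def representative_point(polygon):
    """Return the mean (lat, lon) of a polygon's vertices.

    `polygon` is a list of (lat, lon) tuples.
    """
    if not polygon:
        raise ValueError("polygon has no vertices")
    # Closed OSM ways repeat the first node at the end; drop the duplicate
    # so that it is not counted twice in the average.
    if len(polygon) > 1 and polygon[0] == polygon[-1]:
        points = polygon[:-1]
    else:
        points = polygon
    lat = sum(p[0] for p in points) / len(points)
    lon = sum(p[1] for p in points) / len(points)
    return lat, lon
```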

Or I could use the Geographic Names Register for NSW, but that is just for NSW… http://www.gnb.nsw.gov.au/__gnb/gnr.zip

This is amazing work Andrew! Thanks for sharing the scraper code and the data.

Nice work Andrew. It would be interesting (and somewhat controversial?) to match up this data with house price data (matched on postcode I guess). Surely you’d expect some correlation, particularly for non-selective government schools. The outliers (good schools with cheaper house prices) might see a rise in house prices over the next year.

Had a quick look at the data but can’t see the test results… Can this data also be scraped? That would be the most interesting data to plot…

Have a look at http://github.com/andrewharvey/myschool/tree/master/data_exports/; there is newer data there, including the NAPLAN results. Currently the files in that directory are just tab-separated files of the exported database tables.

Depending on your skill-set you might prefer the database as an SQL dump. That is now in the github repo (omitting the HTML pages as they are huge and also because I’m not sure about their copyright status).

I’m not in a position to make conclusions based on these facts, but I can try to get the facts out there. Do you know where one can obtain house price data (if such exists) or perhaps average incomes for different suburbs?

PS. The geolocation data in those datasets is not always accurate, so it is probably best not to use it yet (although postcodes should be correct).

House price data can be found at http://www.homepriceguide.com.au/snapshot/index.cfm?source=apm. They also have demographics, but that is merely the census data which is publicly available info.

BTW I was able to load the dump into postgres after changing the type ‘pc’ to ‘integer’. Will see if I can come up with some interesting graphs over the next week or so 🙂

Hi Andrew, I’m currently reinventing the wheel scraping the website (just for fun) however using Python not Perl. I’ve had a look at your code and I really find Perl hard to follow. Can you have a look at this post and see what I might be doing wrong?

http://stackoverflow.com/questions/2507280/having-trouble-scraping-an-asp-net-web-page

Hi Andrew, great work on getting this data. Did you happen to do this with the MySchool 2.0 data too by any chance?
